
AI Posts

-

The 'Medium Term' is Next Tuesday: Why Experts Are Missing the Velocity of AI

AI

-

Adaptive Content: When Your Content Responds to How Users Actually Learn

AI

-

Image generated using Gemini’s Nano Banana Pro

The Story of Your Work: Why 'Letting It Speak for Itself' No Longer Works

AI

-

Image generated using Gemini’s Nano Banana Pro

The End of Task-Based Knowledge Work: Why We Should Stop Optimizing and Start Orchestrating

AI

A reflection on the future of knowledge work from our Z AI Accelerate discussions.

-

Mastering the AI Workflow: Why Inaction is the Greatest Risk to Productivity

AI

Last week, I shared my core principles of the “AI Workflow Mastery: Foundation Course,” an enterprise framework developed for knowledge-worker AI adoption. To me, the message is clear: we are standing at a unique and critical juncture in technological and human history, and mastering AI workflows is no longer optional; it is essential for professional relevance. Here are the key takeaways from the presentation, the accompanying report summary, and our path forward.

-

Why AI Would Rather Bluff Than Say 'I Don't Know' and What That Teaches Us About Ourselves

AI

-

How I Use Claude Projects to Plan Family Vacations: A Guide to AI-Powered Travel Planning

AI

-

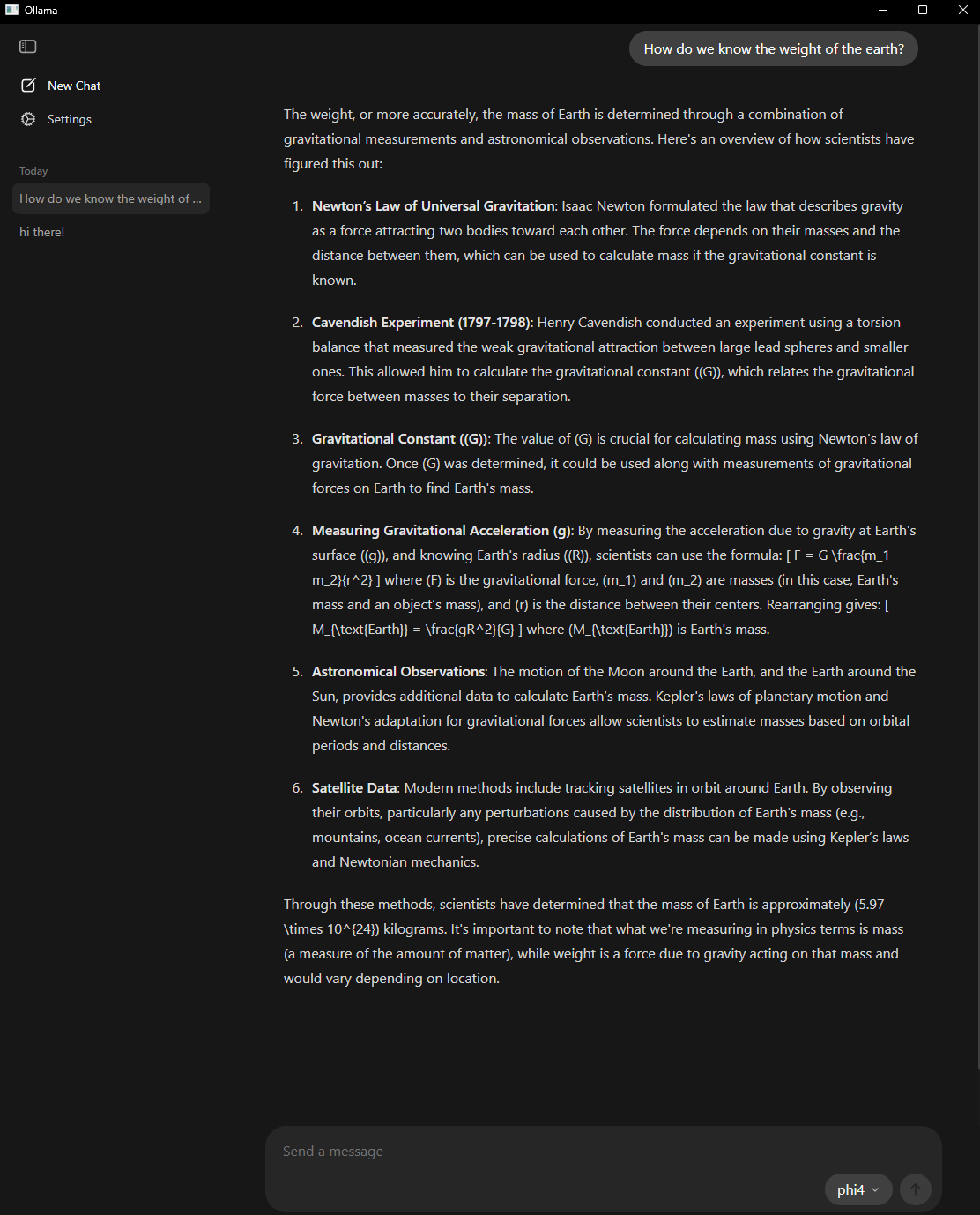

Ollama Takes a Giant Leap Forward with Native Desktop Apps

AI

-

From Content Creation to Knowledge Architecture: What We Learned Building an AI-Native Workflow

AI

-

Building AI Prompts Like Software: A New Frontier in Enterprise Development

AI

-

How I Built an AI-Powered Tool Using Cursor and watsonx.ai (Without Being a Full-Stack Developer)

AI, Tutorial

As a project leader for IBM Redbooks, I spend countless hours reviewing and editing technical content to meet IBM’s writing standards. I’ve always wished for a tool that could automatically apply our style guidelines: active voice, contractions, no first-person pronouns, and more.

-

The New Entry Point: How AI Is Reshaping the Future of Work and Talent Development

AI

The buzz around AI’s capabilities is everywhere. From generating code to creating presentations, AI tools are transforming how we work. But recently, while listening to IBM’s “Mixture of Experts” podcast, I heard something that made me pause and consider the deeper implications of this technological shift.

-

Taming the AI Editor: A Journey to a Killer Copilot Workflow

AI

We’ve all heard the buzz: AI is set to revolutionize content creation and editing. But how do you move from a general-purpose AI tool to a finely tuned assistant that understands your specific, demanding editorial guidelines? And more importantly, how do you keep the human editor firmly in control, enhancing their capabilities rather than just hoping for the best from a black box?

-

Vibe Coding in Action: Creating Vibe Write

AI

As a project leader, I’m always looking for ways to improve my development workflow. Recently, I discovered the concept of “vibe coding” through Y Combinator’s Startup School video “How To Get The Most Out Of Vibe Coding | Startup School,” featuring Tom Blomfield. The video shows how Tom spent a month building side projects with tools like Claude Code, Windsurf, and Aqua, demonstrating how modern LLMs can serve as legitimate collaborators in the development process, from writing full-stack apps to debugging by simply pasting in an error message.

-

Leveraging AI Personas for Comprehensive Document Feedback

AI

Receiving timely and relevant feedback is crucial for improving content quality. Recently, a colleague mentioned how beneficial it would be to have “personas on demand” for feedback. This sparked an idea to create a system that could provide detailed evaluations from multiple perspectives. It was a perfect opportunity to use my local LLM setup to get immediate feedback on a Redbooks publication released just yesterday, the IBM z17 Technical Introduction.

-

Creating a Powerful Document Processing App with DocRAG

AI

In today’s data-driven world, managing and processing documents efficiently is crucial. One of the most common challenges is converting PDFs into formats that are easily searchable and can be integrated with local large language models (LLMs). This blog post details how I used Cursor to create an app called DocRAG, which converts PDFs into Markdown, TXT, and JSON files for easy Retrieval-Augmented Generation (RAG) with your local LLM system.

-

From PDFs to Personalized AI: Building a Custom RAG System for IBM Redbooks

AI, Tutorial

In the world of enterprise IT, technical documentation is both invaluable and overwhelming. IBM Redbooks, the gold standard for in-depth technical guides on IBM products, contain thousands of pages of expert knowledge. But how do we transform these static PDFs into dynamic, queryable knowledge bases? Today, I’d like to share the journey of building a custom Retrieval-Augmented Generation (RAG) system specifically for IBM technical documentation. This project demonstrates how modern AI techniques can unlock the knowledge trapped in technical PDFs and make it accessible through natural language queries.

The Challenge: Unlocking Technical Knowledge

IBM Redbooks are comprehensive technical guides, often hundreds of pages long, covering complex systems like IBM Z mainframes, cybersecurity solutions, and enterprise storage. These documents are treasure troves of information but present several challenges:

-

Google AI Mode vs. Perplexity: A Comparison for Flask Documentation

AI

Google recently launched its experimental AI Mode in Search. As someone working on a BeeAI framework project with a Flask front end, I wanted to compare how Google’s new offering stacks up against Perplexity when searching for Flask information.

-

Building a Recipe Creator with BeeAI Framework: A Comprehensive Tutorial

Python, AI, Tutorial

In this tutorial, we’ll build a practical multi-agent system using the BeeAI framework that can create recipes based on user-provided ingredients. Our Recipe Creator will demonstrate how specialized agents can work together to accomplish a complex task.

-

From Idea to Implementation: Building a Recipe Creator with BeeAI - A Development Journey

Python, AI, Tutorial

In this tutorial, I’ll share the iterative process of developing a Recipe Creator application using the BeeAI framework. Rather than presenting a polished, final product, I want to walk through the actual development journey that Claude (my AI coding partner) and I embarked on together. We practiced what I like to call “vibe coding”: a collaborative process where I guided the conceptual direction while Claude helped implement and troubleshoot the technical details. This post highlights the challenges we faced, the solutions we discovered, and the insights we gained along the way as a human-AI coding team.

-

Navigating the AI Wave: Career Choices and the Future of Knowledge Work

AI

In a recent interview on Hard Fork, hosted by Kevin Roose and Casey Newton, Dario Amodei from Anthropic shared some profound insights about the future of work in the age of AI. As someone who creates content by working with engineering teams at IBM Redbooks, I found his perspectives both enlightening and somewhat unsettling.

-

Unveiling the Magic: How Large Language Models Handle Conversations

AI

When I first started interacting with large language models (LLMs) like those powering Claude and Gemini, I was struck by how seamlessly they maintained context in conversations. It felt as if the model remembered our entire exchange, allowing for a natural back-and-forth dialogue. However, this perception is an illusion, one that masks a fascinating mechanism behind the scenes.

-

Running OpenWebUI with Podman: A Corporate-Friendly LLM Setup

Tutorial, AI

While setting up local LLMs has become increasingly popular, many of us face restrictions on corporate laptops that prevent us from using Docker. Here’s how I successfully set up OpenWebUI using Podman on my IBM-issued MacBook, creating a secure, IT-compliant local AI environment.

-

The Productivity Dilemma: Why More Speed Isn't Always the Answer

AI

Lately, I’ve been watching videos from Matthew Berman, and I’ve really enjoyed them. They’re great deep dives into various AI topics.