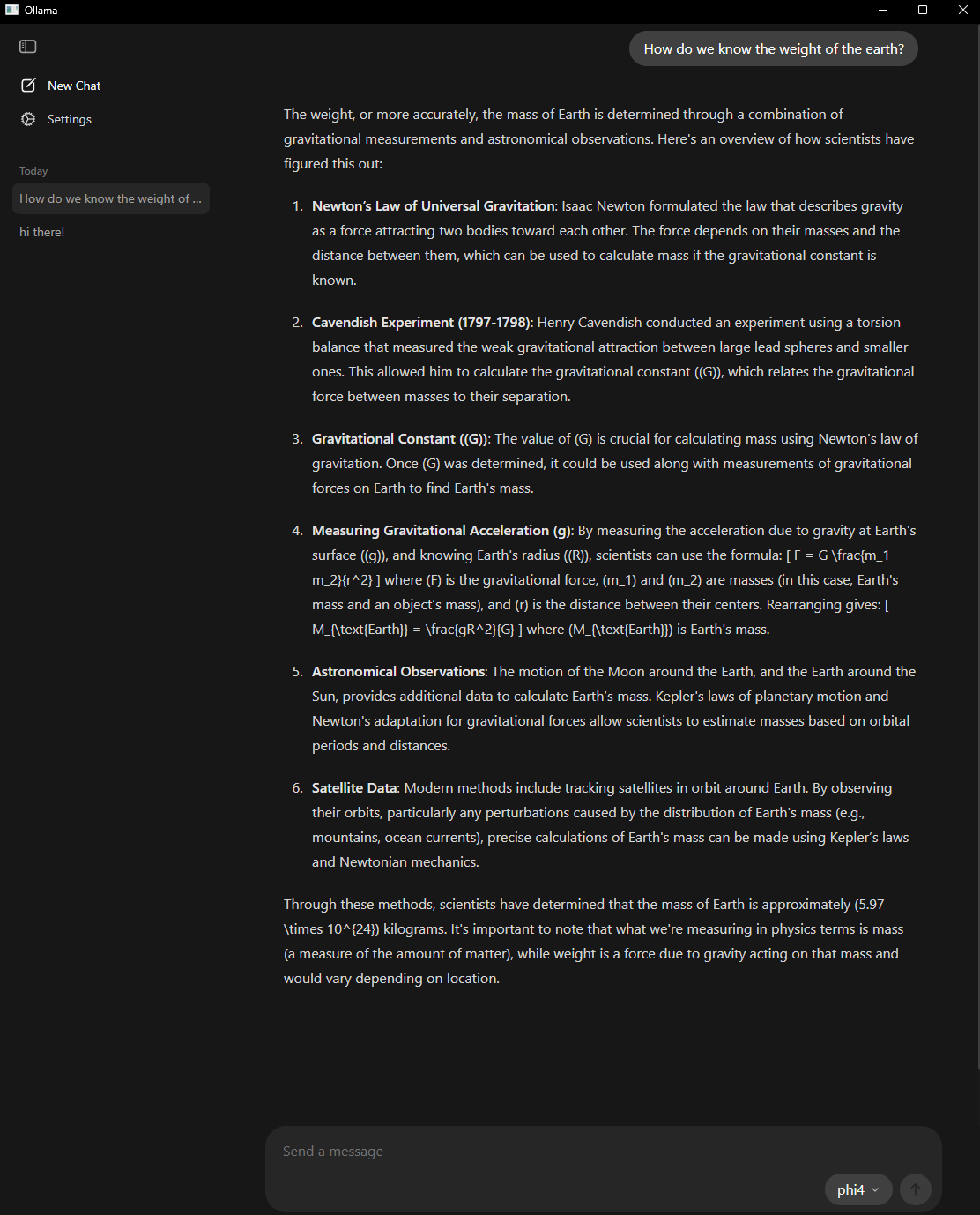

Ollama Takes a Giant Leap Forward with Native Desktop Apps

The local AI landscape just got significantly more accessible. Ollama has released native desktop applications for macOS and Windows, bringing enterprise-grade local language models directly to your desktop with an intuitive chat interface. This development marks a pivotal moment for anyone looking to harness AI capabilities while maintaining data sovereignty.

Why This Matters for Enterprise Users

Until now, running Ollama models locally meant either mastering command-line interfaces (CLIs) or wrestling with Open WebUI’s complex setup process. While Open WebUI offers extensive configurability and advanced features, it can require Docker knowledge, API knowledge, port management, and often troubleshooting configuration issues. Ollama’s new native apps take the opposite approach by prioritizing simplicity and immediate usability over extensive customization options, making local AI as straightforward as launching any desktop application.

For enterprise environments where data privacy and control are paramount, this represents a game-changing development. Teams can now leverage powerful language models without sending sensitive information to external APIs or cloud services.

The Trade-offs: Simplicity vs. Customization

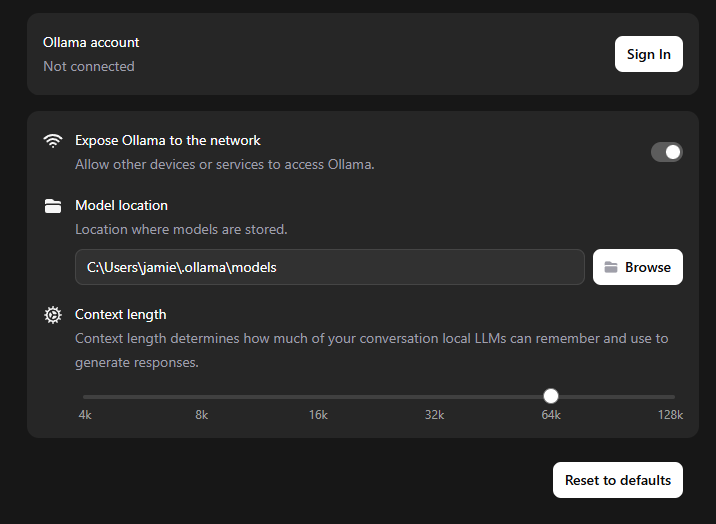

This streamlined approach does come with limitations. Currently, the native app appears to lack system prompt customization, a feature that power users rely on for defining specific AI behavior and context. While this may be added in future releases, users who need extensive prompt engineering capabilities might still need to rely on Open WebUI or CLIs for now.

If you need maximum configurability, complex multi-user setups, or advanced prompt management, Open WebUI remains the better option despite its complexity. But if you want to quickly start using local AI models without configuration overhead, Ollama’s native app is transformative.

Key Features That Stand Out

Integrated Chat Interface: The most obvious improvement is the built-in chat window. No more terminal commands or browser-based interfaces – just download, install, and start conversing with your models.

File Processing Capabilities: The drag-and-drop functionality for text files and PDFs opens up immediate practical applications. Whether you’re analyzing technical documentation, processing reports, or reviewing code, the workflow becomes seamless.

Configurable Context Length: Perhaps most importantly for complex enterprise use cases, users can adjust context length in the settings. This means handling larger documents and maintaining longer conversation threads, though it comes with the expected memory trade-offs.

Multimodal Support: Built on Ollama’s enhanced multimodal engine, the app supports image analysis with compatible models like Google DeepMind’s Gemma 3. This expands use cases into visual document analysis and technical diagram interpretation.

Practical Implications

The file processing capabilities particularly shine for technical documentation work. Code files can be directly analyzed by models, making this an excellent tool for:

- Code review and documentation generation

- Technical writing assistance

- System architecture analysis

- Legacy code understanding

The ability to process large documents with adjustable context length means you can feed entire technical specifications or lengthy reports into the system for analysis and summarization.

Getting Started

The new Ollama desktop apps are available for immediate download on both macOS and Windows. For users who prefer the CLI or need to deploy in headless environments, standalone CLI versions remain available through Ollama’s GitHub releases.

Looking Forward: The Rollout Revolution

This release is a potential solution to one of the biggest challenges facing technical teams adopting AI: the gap between individual success and team-wide implementation.

In my recent experience rolling out AI workflows to a technical team, we discovered that individual AI wins often create more problems than they solve at scale. Brilliant prompting strategies developed by one team member became undocumented tribal knowledge that couldn’t transfer. Personal setups that worked perfectly for early adopters failed for others with different technical environments or comfort levels. We were creating new silos faster than we could break down old ones.

Ollama’s native app addresses several of these core scaling challenges directly. The streamlined setup eliminates the technical barrier that prevented half our team from even getting started. The consistent interface means training can focus on effective prompting rather than troubleshooting Docker containers or managing port configurations. Most importantly, it provides a standardized foundation that individual solutions can build upon rather than diverge from.

For teams wrestling with the “AI literacy challenge” (the gap between knowing AI exists and knowing how to use it effectively) this kind of accessible tooling is transformative. When you’re asking experts with decades of experience to trust probabilistic systems for first drafts, every friction point in the setup process becomes a reason to defer adoption. The combination of ease of use, powerful local processing, and enterprise-friendly privacy makes this a significant milestone in making AI accessible to broader organizational contexts. For technical teams trying to move from individual experimentation to scalable workflows, tools like this could be the difference between transformation and endless pilot projects.