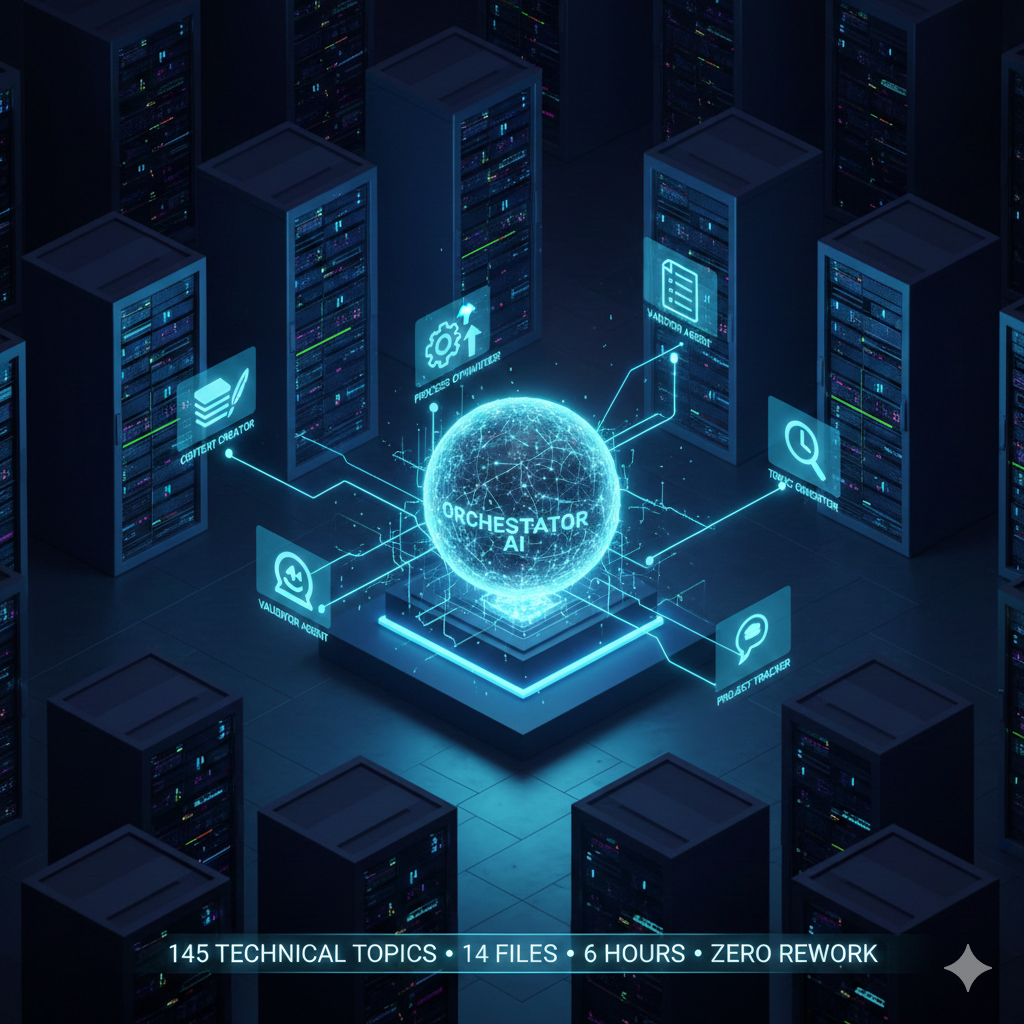

The Power of Orchestration: How I Generated 145 Technical Topics in 6 Hours Using Multi-Agent AI Workflows

Image generated used Gemini’s Nano Banana Pro

Image generated used Gemini’s Nano Banana Pro

“Generate 14 interconnected files with 145 topics covering enterprise AI. Maintain consistency. Ensure accurate cross-references. Complete it in hours, not weeks.”

That was the task. And for the first time, I used Project Bob’s Orchestrator mode to coordinate the whole thing. The results were, frankly, fantastic.

This post explores what I learned about orchestrating AI agents, why it represents a fundamental shift in AI-assisted content creation, and how you can start using this approach yourself.

The Scale Challenge

Large-scale content generation faces a critical problem: maintaining quality and consistency across hundreds of pages. The traditional approaches all fall short.

Manual creation takes weeks to months. Consistency is difficult to maintain across that timespan, cross-references become error-prone, and scalability is limited by human capacity.

Single AI agents hit context limits. They can’t hold an entire project in memory. Quality degrades over long sessions as “consistency drift” sets in. Mistakes compound. And a generalist agent applies one-size-fits-all thinking to specialized problems.

Neither approach scales while maintaining quality. Manual work is too slow; single agents lack context and specialization.

The solution? Orchestrated multi-agent workflows: a project manager AI coordinating specialized AI agents across focused sessions.

The Orchestration Architecture

The approach uses a two-tier model:

Tier 1: The Orchestrator acts as project manager. It breaks projects into manageable sessions, delegates to appropriate specialists, tracks progress, and validates before proceeding.

Tier 2: Specialized Agents focus on specific deliverables. They apply domain expertise, follow templates, validate outputs, and report completion.

Orchestrator (Project Manager)

│

├── Session 1 Specialist

├── Session 2 Specialist

├── Session 3 Specialist

└── Session N Specialist

Each session follows a consistent pattern:

- Delegation: Clear instructions, context, references

- Execution: Generate content following templates

- Validation: Check against quality criteria

- Reporting: Completion with metrics

- Review: Verify before next session

The key principles: clear session boundaries, complete context provided upfront, incremental validation, consistent templates, progress visibility, and flexible adaptation.

Real-World Results

Here’s what we actually accomplished.

The Challenge:

- 14 files across 2 publications

- 145 topics total

- 370+ pages

- 5 personas served

- Multiple cross-references

The Approach: Six Sessions

For Publication 2 (a Developer Guide):

- Session 1: Executive summary + Chapter 1 (2 files, 17 topics)

- Session 2: Chapters 2-3 (2 files, 29 topics)

- Session 3: Chapters 4-5 + Validation (3 files, 27 topics)

For Publication 3 (a Governance Guide):

- Session 4: Executive summary + Chapter 1 (2 files, 17 topics)

- Session 5: Chapters 2-3 (2 files, 31 topics)

- Session 6: Chapters 4-5 + Validation (3 files, 24 topics)

The Results:

| Metric | Result |

|---|---|

| Files completed | 14/14 (100%) |

| Topics generated | 145/145 (100%) |

| Pages produced | 370+ |

| Content reuse potential | 88% |

| Cross-references validated | 36 |

| Total time | ~6 hours |

| Rework required | Zero |

Compare that to the alternatives:

| Metric | Manual | Single Agent | Orchestrated |

|---|---|---|---|

| Time | 4-6 weeks | 2-3 days | 6 hours |

| Consistency | Variable | Degrades | Maintained |

| Cross-refs | Error-prone | Difficult | Validated |

| Completion | 100% | 60-80% | 100% |

The success factors were clear: session design kept scope manageable (2-3 files each), instructions were explicit, validation happened continuously, templates from Publication 1 served as references, and todo lists maintained visibility throughout.

Seven Key Advantages

1. Scale Without Quality Loss

Long sessions cause context drift and inconsistency. With orchestration, each session starts fresh with complete context. Templates ensure consistency. Validation prevents error propagation.

Session 3 generated 3 files with 27 topics while maintaining perfect consistency with Sessions 1-2.

2. Specialization and Expertise

Generalist agents lack focused expertise. With orchestration, different modes handle different tasks. Specialists apply where needed. Quality improves through specialization.

3. Transparent Progress Tracking

Long sessions provide no visibility into what’s happening. With orchestration, todo lists show progress. Each session reports metrics. Stakeholders see incremental results.

“4 of 6 sessions complete, 67% done” provides clear status that builds confidence.

4. Incremental Validation

End-stage validation requires extensive rework when issues are found. With orchestration, validation happens after each session. Issues get caught immediately. Quality is tracked throughout.

Each session included validation summaries showing topic counts, persona alignment, and reuse potential.

5. Flexible Adaptation

Rigid processes can’t adapt to changes. With orchestration, session size is adjustable. Requirements can be refined between sessions. Learning applies forward.

I adjusted session sizes based on complexity: 2 files for simple content, 3 when including validation passes.

6. Parallel Potential

Sequential approaches limit throughput. With orchestration, independent sessions can run concurrently. Multiple specialists work simultaneously. Throughput scales.

Sessions 1 and 4 (different publications) could have run in parallel. Something to try next time.

7. Reusability and Learning

Each project typically starts from scratch. With orchestration, session patterns become templates. Successful approaches get documented. Efficiency improves over time.

The session delegation template I developed is now reusable for similar projects.

Practical Implementation

Good Candidates for Orchestration

This approach works best when you have:

- 10+ interconnected files

- 50+ topics or components

- Multiple content types

- Consistency requirements

- Cross-referencing needs

It’s probably overkill for single files, content with no interdependencies, minimal consistency needs, or one-time simple tasks.

Design Your Sessions

Follow these principles:

- Manageable scope: 2-3 files per session

- Logical grouping: Related content together

- Clear dependencies: Foundation first

- Validation points: End of each phase

- Natural breakpoints: Align with structure

Here’s an example structure:

Phase 1: Foundation

Session 1: Overview + Chapter 1

Phase 2: Core Content

Session 2: Chapters 2-3

Session 3: Chapters 4-5

Phase 3: Completion

Session 4: Final chapters + Validation

Prepare Your Context

Essential elements to gather upfront:

- Project overview

- Templates and guides

- Reference examples

- Previous outputs

- Quality criteria

- Success metrics

Execute and Validate

The pattern for each session:

- Delegate with clear instructions

- Wait for completion

- Review outputs

- Validate requirements

- Update progress

- Proceed to next

Validation checklist:

- ✓ All files generated

- ✓ Topic counts on target

- ✓ Quality metrics met

- ✓ Cross-references validated

- ✓ Consistency maintained

Key Lessons Learned

Session size matters. 2-3 files per session is optimal for complex content. Adjust based on complexity, not just count.

Context is king. Complete context upfront dramatically improves quality. List all references explicitly. Include previous outputs.

Validation prevents rework. Checking after each session catches issues immediately. Never skip validation.

Templates enable consistency. Having reference templates ensures consistent structure. Reference them explicitly in every session.

Progress visibility builds confidence. Todo lists showing completion give stakeholders confidence. Update regularly. Transparency builds trust.

Specialization improves quality. Using specialized modes produces better results. Match specialists to tasks.

Orchestration scales. The approach worked equally well for 2-file and 3-file sessions. The pattern scales effectively.

The Future of AI Orchestration

Near-Term (6-12 months)

Parallel Execution: Multiple sessions running simultaneously for dramatic time reduction.

Automated Orchestration: AI orchestrators that plan automatically with dynamic session sizing and self-optimizing workflows.

Specialized Libraries: A growing ecosystem of specialists with domain-specific expertise and plug-and-play agents.

Quality Automation: Automated validation, real-time consistency monitoring, and predictive quality metrics.

Long-Term (1-3 years)

Autonomous Management: AI orchestrators managing entire projects with human oversight rather than hands-on control.

Cross-Project Learning: Patterns applied across projects with accumulated expertise and continuously improving efficiency.

Hybrid Teams: Humans and AI collaborating seamlessly, each doing what they do best, with multiplicative productivity.

The Paradigm Shift

We’re moving from:

- “AI as tool” → “AI as team member”

- “Single agent” → “Coordinated specialists”

- “Manual orchestration” → “Automated coordination”

- “One-off tasks” → “Reusable workflows”

Orchestration is a fundamental shift in how we work with AI, moving from using AI to collaborating with AI.

Getting Started

Your First Project

Start small:

- 5-10 file project

- 3-4 sessions

- Existing templates

- Focus on learning

Essential setup:

- Define scope

- Identify references

- Create session plan

- Prepare context

- Set up tracking

First session checklist:

- Clear delegation message

- All references listed

- Requirements defined

- Success criteria set

- Validation plan ready

Learn and iterate:

- Review results

- Adjust approach

- Document learnings

- Build templates

- Share with team

Conclusion

I generated 14 files with 145 topics in 6 hours with 100% completion, perfect consistency, 88% reuse potential, zero rework, and validated quality.

But the real achievement isn’t the output. It’s the approach.

Orchestration represents a shift:

- From single agents struggling to coordinated specialists excelling

- From opaque processes to transparent progress

- From one-size-fits-all to specialized expertise

- From end-stage validation to continuous quality

The future isn’t about more powerful single agents. It’s about better orchestration of specialists. It’s coordination, not just capability.

The question isn’t whether to orchestrate. It’s how quickly you can start.

A note on process: I developed this post in conversation with Claude, Anthropic’s AI assistant. The ideas are mine, but Claude helped me connect them, pressure-test the argument, and draft the prose. The session itself was a back-and-forth: I’d push back, ask for revisions, add my own examples, and Claude would adapt. Less like querying a search engine, more like working with a digital colleague. There’s something fitting about using an AI tool to write about the disruption AI is causing. I stayed in the driver’s seat. This is me, speaking for my work.